Yes, okay, sure, but what is Cybernetics?

Yesterday, a conversation with a friend veered into the convoluted history of post-WWII scientific and technological development, and the various characters that drove it. Unavoidably, we ended up on cybernetics, that long-forgotten systematization of theories around feedback and control systems, which at the time appeared so promising but is now at best ignored, and at worst reviled.

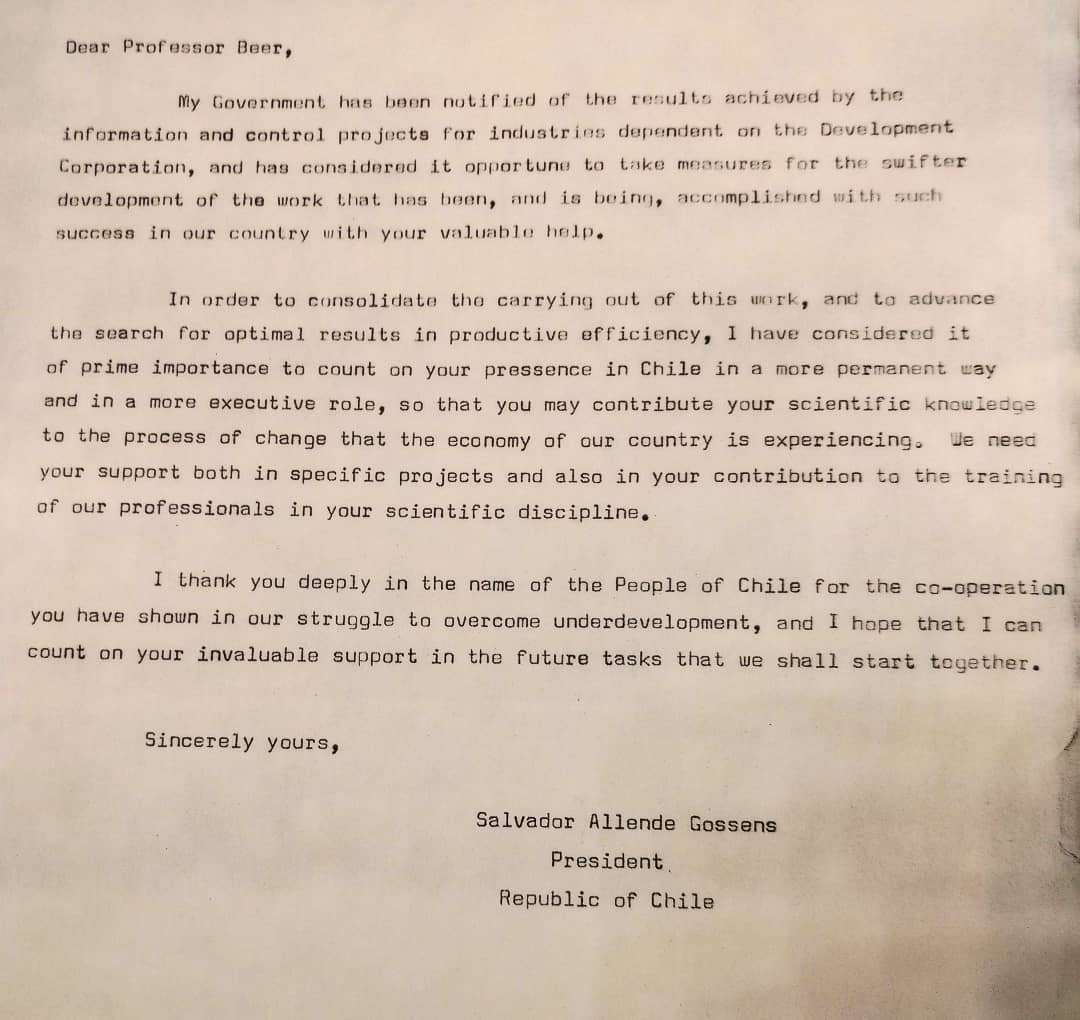

When reading the history of cybernetics, it is hard not to get swept up by the eager and earnest romanticism of it, and the tenderness with which the various characters discuss the ideas. When I visited the Chilean National Archives some years back, and was allowed to browse through the few boxes of documents saved from the Cybersyn project, I was surprised to see how blurry the line was between serious technical specifications with specific calculations and heartwarming attestations of how much the various actors cared about the work being done and how deeply they believed in its importance.

Now, some decades later, there are few left who openly discuss cybernetics as a serious field. Not because it doesn’t warrant serious discussion, in my opinion, but rather because the more practical portions of it have been parceled off into other fields: operations research, computer science, artificial intelligence, cognitive science, neuroscience, behavioral psychology, and more. Most of these fields have their own distinct histories, but it’s truly fascinating, wandering through them, to see ideas cited with no mention of their origins, or characters central to cybernetics popping up in an altogether different context.

Some of this is, unfortunately, an illusion. The real influence of cybernetics as a field appears to have waned as early as the mid-1980s. No psychologists today remember Ashby, no students of management learn of Stafford Beer, and surprisingly few of those who deal with machine learning or “artificial intelligence” today have ever heard of Norbert Wiener. Indeed, the Wikipedia page on artificial intelligence doesn’t mention him at all, saying only that:

The Church-Turing thesis, along with concurrent discoveries in neurobiology, information theory and cybernetics, led researchers to consider the possibility of building an electronic brain. The first work that is now generally recognized as AI was McCulloch and Pitts' 1943 formal design for Turing-complete “artificial neurons”.

When access to digital computers became possible in the mid-1950s, AI research began to explore the possibility that human intelligence could be reduced to step-by-step symbol manipulation, known as Symbolic AI or GOFAI. Approaches based on cybernetics or artificial neural networks were abandoned or pushed into the background.

I suppose it is some relief that they mention McCulloch and Pitts at least. But so much appears lost to history – not that it isn’t well documented, but rather, it is simply not discussed much.

Modern cyberneticists do exist. I had the great joy some years ago to lecture, alongside Harry Halpin, at a master’s class under Professor Francis Heylighen at the Vrije Universiteit Brussel. Our talk was, to be honest, a bit scattershot: a rough attempt at outlining how cybernetic theory is applicable to governance. But it was a fantastic honor to be invited to do the session, in particular because I had already read so many of Heylighen’s papers with great interest. I also had the joy of reading Carlos Gershenson’s Ph.D. thesis. Gershenson is a former student of Heylighen’s, and his thesis holds a lot of interesting ideas about self-organization that might be relevant in other fields today – although since 2007 there have been enough advances in machine learning to overshadow most of that work.

And there are others hiding in the woodwork. In fact, I recently stumbled into this topic while having a beer with a computer science professor of my acquaintance, only to discover that he is quite familiar with the field but apparently never brings it up, because it’s just too weird for most people. Fair.

But back to the question

During last night’s conversation, my friend made the observation that cybernetics feels conceptually out of reach. “What is it, exactly?”

Here we could trot out Norbert Wiener’s original definition: “the science of communications and automatic control systems in both machines and living things.”

Or, perhaps better, Margaret Mead’s description of it as “a form of cross-disciplinary thought which made it possible for members of many disciplines to communicate with each other easily in a language which all could understand.”

Wikipedia cites those and numerous other definitions:

- Ross Ashby: “the art of steermanship”

- Gregory Bateson: “a branch of mathematics dealing with problems of control, recursiveness, and information, focuses on forms and the patterns that connect”

- Andrey Kolmogorov: “the study of systems of any nature which are capable of receiving, storing, and processing information so as to use it for control”

- Gordon Pask: “the science or the art of manipulating defensible metaphors; showing how they may be constructed and what can be inferred as a result of their existence”

… there are others, but I think the sheer range of these speaks to the point: cybernetics does in fact feel conceptually out of reach.

Not (exactly) Science

Delving into the epistemology of cybernetics may yield better results. It was understood even early on that cybernetics was a good conceptual framework for thinking about problems where scientific method wasn’t performing very well. In fact, I don’t think it’s entirely ahistorical to say that this curiosity was what prompted the Macy conferences in the first place.

Nowadays, people are quite used to the idea of torturing statistical methods in order to produce statistically significant results from piles of data. In fact, we’ve built entire economies on this very concept. But if we think foundationally about scientific method – the method itself, not just the abstract concept of “science” which people take so lightly nowadays – there is a certain set of rules which are largely ignored by the majority of scientific papers published.

In particular, scientific method requires a hypothesis, which can be tested with an experiment, the data from which can be analyzed, and the hypothesis then accepted or rejected. Except, we’ve found that as the questions become more complex, the experiments more elaborate, and the data more voluminous, we end up having to rely on methods requiring multiplexed hypotheses, statistical wizardry, and more handwaving than should really be tolerated in an empirical field.

This tendency has yielded “p-hacking”, data dredging, cherry picking, post-hoc analysis and other practices which are metascientific at best and fallacious on average. One good warning sign of this is when data scientists engage in “Exploratory Data Analysis” (EDA) prior to forming hypotheses. It’s not that EDA is a bad practice – far from it, it’s often necessary in order to understand the biases and limitations of the data collection methods and the resulting data itself. But attacking a data set without an inciting conjecture is not, strictly, science.

And yet, it is done. In part because many simply don’t know any better; in part because of the organizational incentives and pressures in academia; in part due to an acknowledgement that things have simply become so complicated that there are no other viable strategies in many cases.

But I think the most important reason is that there is simply so much data, and so many strong indications that there are patterns hiding in the data waiting to be understood, and that analyzing these patterns may yield great insights into the nature of reality. And so people get lost in the data, blinded by insights that there is no theoretical framework to discuss in context. Or, if there is one, it is underspecified and made muddy by the avalanche of new ideas.
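To make the worry about multiplexed hypotheses and data dredging concrete, here is a minimal sketch in Python. It rests on two assumptions that are mine, not part of the argument above: that the tests are independent, and that under a true null hypothesis a well-calibrated test yields a p-value uniformly distributed between 0 and 1. The function names are made up for illustration. The point is only that if you try enough hypotheses against pure noise, something will look significant.

```python
import random


def family_wise_error_rate(n_tests: int, alpha: float = 0.05) -> float:
    """Chance of at least one false positive across n_tests independent
    tests when every null hypothesis is actually true."""
    return 1.0 - (1.0 - alpha) ** n_tests


def simulate_dredging(n_tests: int, alpha: float = 0.05,
                      n_datasets: int = 5000, seed: int = 0) -> float:
    """Fraction of pure-noise data sets in which at least one of
    n_tests hypotheses comes out 'significant'.

    Assumption: each p-value is drawn uniformly from [0, 1), which is
    how a calibrated test behaves when there is no real effect.
    """
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_datasets):
        if any(rng.random() < alpha for _ in range(n_tests)):
            hits += 1
    return hits / n_datasets


if __name__ == "__main__":
    for k in (1, 5, 20, 100):
        print(k, round(family_wise_error_rate(k), 3),
              round(simulate_dredging(k), 3))
```

With a single pre-stated hypothesis the false-positive rate stays at the nominal 5%. With twenty hypotheses tried after the fact it is closer to two in three, which is why a conjecture formed before looking at the data matters so much.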

Absorption of Complexity

One of the most important ideas I have taken from cybernetics is what is commonly referred to as Ashby’s law: variety consumes variety. Or, put more simply: for one system to exert control over another, the controller must exhibit at least as much variety as the system being controlled. For instance, an aircraft operates in three dimensions, and so the control inputs for the aircraft must support control over three dimensions. The details of the mapping do not matter quite as much as the fact that the controller has at least as much variety as what it controls. Thus the controls of a helicopter can be different from the controls of a glider, but the control systems of both vehicles have enough variety to consume the variety of the environment the vehicle operates in.
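Ashby’s law can be checked by brute force on a toy system. The sketch below is an illustration under assumptions of my own, not anything taken from Ashby or Beer: disturbances and responses are small integers, the outcome of disturbance `d` met by response `r` is `(d - r) % n_disturbances`, and the regulator wants as few distinct outcomes as possible. The function name is hypothetical.

```python
from itertools import product
from math import ceil


def min_outcome_variety(n_disturbances: int, n_responses: int) -> int:
    """Smallest number of distinct outcomes any regulator can achieve.

    Toy assumption: the outcome of disturbance d met by response r is
    (d - r) % n_disturbances, so for a fixed response no two
    disturbances ever lead to the same outcome.
    """
    best = n_disturbances
    # A strategy assigns one response to every possible disturbance;
    # try all of them (fine for small systems only).
    for strategy in product(range(n_responses), repeat=n_disturbances):
        outcomes = {(d - r) % n_disturbances for d, r in enumerate(strategy)}
        best = min(best, len(outcomes))
    return best


if __name__ == "__main__":
    n_disturbances = 6
    for n_responses in (1, 2, 3, 6):
        achieved = min_outcome_variety(n_disturbances, n_responses)
        bound = ceil(n_disturbances / n_responses)  # requisite-variety floor
        print(n_responses, achieved, bound)
```

In this toy, no strategy gets below the number of disturbances divided by the number of responses, rounded up. Only when the regulator has as many responses as there are disturbances can it hold the outcome to a single value.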

Stafford Beer, in his paper “The Viable System Model: Its Provenance, Development, Methodology and Pathology”, points out many ways in which this law prescribes limitations:

It has always seemed to me that Ashby’s Law stands to management science as Newton’s Laws stand to physics; it is central to a coherent account of complexity control. “Only variety can destroy variety.” People have found it tautologous; but all mathematics is either tautologous or wrong. People have found it truistic; in that case, why do managers constantly act as if it were false? Monetary controls do not have requisite variety to regulate the economy. The Finance Act does not have requisite variety to regulate tax evasion. Police procedures do not have requisite variety to suppress crime. And so on.

Scientific method has been a remarkably powerful tool through the years, but, as the Macy conferences alluded to and numerous writings have shown, it is at its best when there are relatively few variables and the relationships between them are largely linear. The more nonlinear the described system becomes, the harder it gets to apply scientific method. Similarly, when the degrees of freedom grow into the thousands, scientific method struggles to maintain predictive capacity. Now, to be clear, this is not to say that scientific method fails in these settings. On the contrary – statistical methods such as generalized linear models, machine learning methods such as k-means clustering and support vector machines, and numerous other developments have extended the reach of science far into the murky depths of high-dimensional nonlinear systems.

But what I’d like to argue is that there was, at some point in the 1970s, a hint at some other kind of epistemological method, one that didn’t perform better than science on low-variety systems, and possibly turned out to be more confusing in such settings, but was more flexible or at least more predictive than scientific method in these high-dimensional or extremely nonlinear settings. And while precisely what that method is never got formally stated, the implications that were starting to emerge from the work leading up to it were absorbed all throughout science.

This might seem like an extraordinary claim, but let’s observe that there are, throughout industry and academia, dozens of epistemological methods in practice that are emphatically not scientific. Anthropology is the easy example, where certainly hypotheses exist, but the qualitative nature of ethnographic research does not lend itself to the same structure of hypothesis testing as is strictly required under scientific method. This is not to say that scientific research doesn’t occur under anthropology, but rather to say that anthropology as a field is epistemologically sound even when it does not employ the scientific method.

Not all knowledge must be tested scientifically for it to be knowledge; but all knowledge must be tested scientifically for it to be science.

So why would it be so outlandish to think that another method could operate better than scientific method in a realm where scientific method performs poorly? There’s a reason why ethnography is such a powerful tool.

(And this is a good place to mention that mathematics is not a science, although it is a fantastically powerful philosophy with which to conduct science.)

So what then is cybernetics?

Okay okay, this is getting long, and it is impossible to even begin to discuss this without stepping onto a philosophical minefield where every statement is potentially contentious. But let me attempt a definition, and make a suggestion:

Cybernetics is a theoretical framework for absorbing complexity, for the purpose of observing, analyzing, and interacting with systems whose interactions are too complex to be studied in detail.

This is probably a bad definition, but I think it covers what I am alluding to at least in part.

Cybernetics as a field has all but died out, because the easily comprehended findings were subsumed into other fields of study, and what remained became a stomping ground for weirdos, romantics, and aloof visionaries. However, there appears to have been something there, something that many people have been picking at for decades now.

It took centuries to formalize science under figures such as Newton, Hooke, Brahe, Darwin and others. Mathematics was effectively reinvented at the beginning of the 20th century in its current form, after a series of world-shattering discoveries ranging from Leibniz to Gödel and beyond. We know that knowledge can be expanded and we know that new epistemological systems can be found, in particular because we keep finding new ones.

So here is the suggestion: Let’s try to figure out if the theoretical framework for complexity represented by cybernetics actually still has something significant to offer. It might not. But it’s worth checking. Right? Exploring ideas is fun anyway.

Reading list

So, in order to facilitate this exploration, here are some “essential readings” on the topic, both new and old. Some are referenced in the text above, but I’ve compiled them here for convenience.

This is a mixed bag of theory, history, and other. They’re, at least to begin with, in an order I can recommend. I should say that while I’ve read many of these, I have not read all of them. In part, compiling this list is for the purposes of padding my already overflowing tsundoku. Enjoy.

- Cybernetics: or Control and Communication in the Animal and the Machine, Norbert Wiener.

- The Human Use of Human Beings, Norbert Wiener.

- An Introduction to Cybernetics, Ross Ashby.

- The Cybernetics Group, Steve Joshua Heims.

- Cybernetic Revolutionaries, Eden Medina.

- The Cybernetics of National Development, Stafford Beer.

- Fanfare for Effective Freedom, Stafford Beer.

- Design and Control of Self-Organizing Systems, Carlos Gershenson.

- “The Viable System Model: Its Provenance, Development, Methodology and Pathology”, Stafford Beer.

- Interactions of Actors Theory, Gordon Pask.

- The Cybernetic Hypothesis, Tiqqun.

- “Russian Scandals”: Soviet Readings of American Cybernetics in the Early Years of the Cold War, Slava Gerovitch.

- The Phenomenon of Science: a cybernetic approach to human evolution, Valentin Turchin.

- Understanding Understanding: Essays on Cybernetics and Cognition, Heinz von Foerster.

- Understanding Systems: Conversations on Epistemology and Ethics, Heinz von Foerster.

- Cybernetics and Second-Order Cybernetics, Francis Heylighen and Cliff Joslyn.

- The Science of Self-organization and Adaptivity, Francis Heylighen.

- Accelerating Socio-Technological Evolution: from ephemeralization and stigmergy to the global brain, Francis Heylighen.

- The Global Superorganism: an evolutionary-cybernetic model of the emerging network society, Francis Heylighen.